News

Apple Unveils Tap to Pay on iPhone for Seamless Contactless Transactions in Singapore

Apple has rolled out its Tap to Pay on iPhone capability across Singapore, letting artisans, boutique owners, and large businesses turn an iPhone into a contactless payment terminal.

First announced in the United States in February 2022, Tap to Pay lets an iPhone accept payments made with Apple Pay, contactless credit or debit cards, and other digital wallets. Each transaction is encrypted, so Apple cannot see who the customer is or what they bought.

The feature requires no additional hardware or card readers. Instead, it uses the iPhone's built-in NFC to read each contactless payment securely. It also supports PIN entry, with accessibility options for users who need them.

At launch in Singapore, Tap to Pay is supported by payment platforms from Adyen, Fiuu, HitPay, Revolut, Stripe, and Zoho. Apple said Grab will join early next year, expanding the ecosystem further.

Tap to Pay requires an iPhone XS or later. For the customer, the experience is the same as a regular Apple Pay transaction: the merchant opens a compatible app, enters the sale, and holds out the iPhone, and the buyer completes the payment with any supported contactless card or device.

Tap to Pay on iPhone is now available in 50 countries and regions, and Apple's website lists the currently supported locations.

News

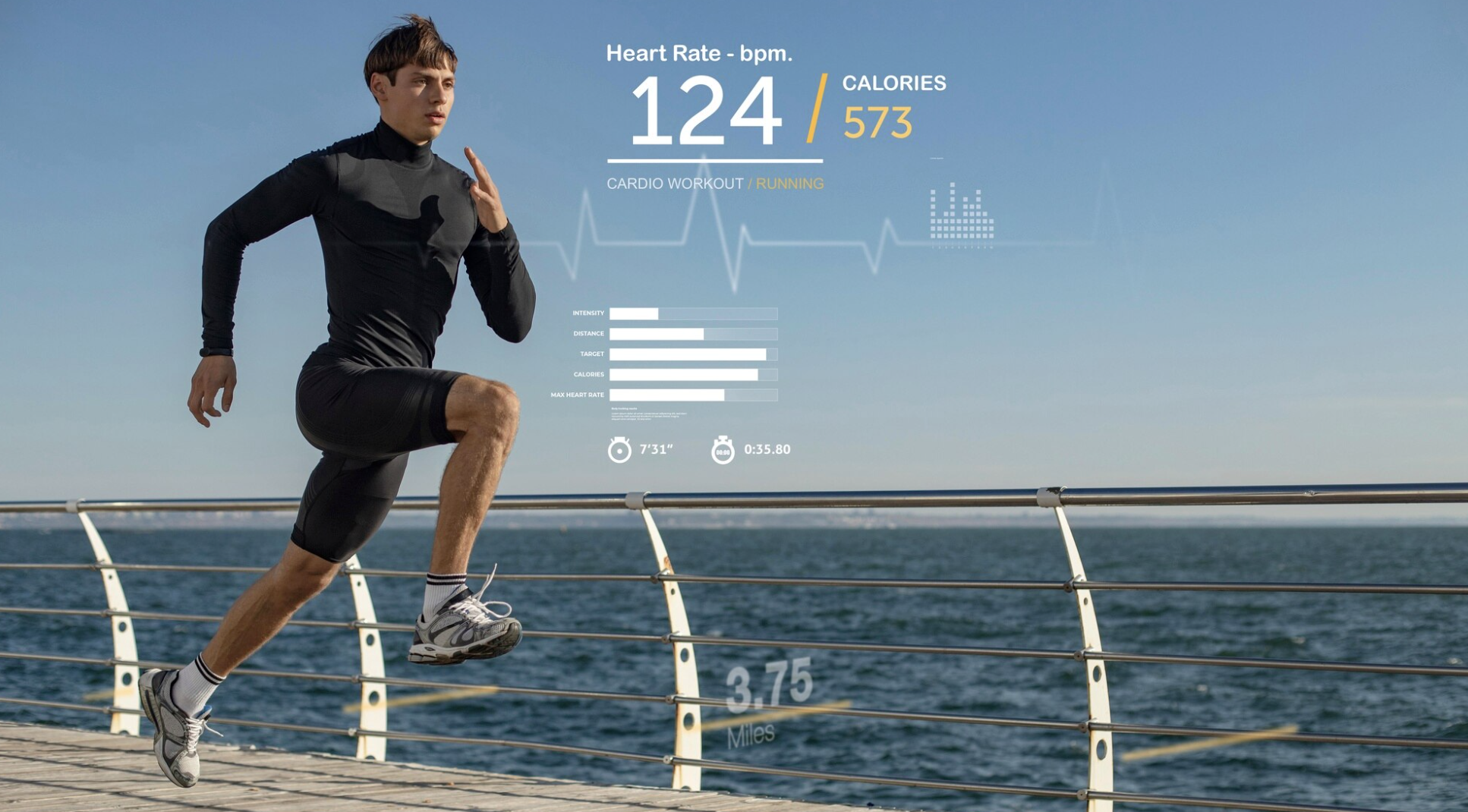

Biomechanical Motion Tracking: The Smart Technology Revolutionizing Sports Performance

Biomechanical motion tracking is changing the way athletes train, compete, and recover. By combining sports science with advanced sensor technology, this innovative system captures how the body moves in real time. Coaches, trainers, and sports scientists now use this powerful tool to analyze movement patterns, prevent injuries, and unlock peak athletic performance.

What Is Biomechanical Motion Tracking?

Biomechanical motion tracking is a technology-driven method of recording and analyzing body movements during physical activity. It uses high-speed cameras, wearable sensors, and artificial intelligence to measure factors such as joint angles, muscle activation, acceleration, balance, and force.

Unlike traditional video analysis, biomechanical motion tracking delivers precise, data-driven insights. It converts complex movements into measurable data that athletes and coaches can understand and improve.
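To make "joint angles" concrete: given three tracked points, such as hip, knee, and ankle markers, the knee angle is the angle between the thigh and shank vectors at the knee. The Python sketch below assumes the system already outputs 3D marker coordinates in one shared frame; the function name and sample coordinates are hypothetical, and commercial systems typically add filtering and anatomical calibration on top of this basic geometry.

```python
import math
from typing import Sequence

Point = Sequence[float]

def joint_angle(proximal: Point, joint: Point, distal: Point) -> float:
    """Angle in degrees at `joint` between the segments to `proximal` and `distal`."""
    u = [p - j for p, j in zip(proximal, joint)]  # e.g. knee -> hip (thigh)
    v = [d - j for d, j in zip(distal, joint)]    # e.g. knee -> ankle (shank)
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("marker positions must be distinct")
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_theta = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cos_theta))

# Hypothetical marker positions in metres (x, y, z) for a single frame.
hip = (0.0, 1.0, 0.0)
knee = (0.0, 0.5, 0.0)
ankle = (0.5, 0.5, 0.0)
print(round(joint_angle(hip, knee, ankle), 1))  # 90.0
```

Running this per frame produces an angle-versus-time curve; a fully straight leg gives 180 degrees in this convention, so some tools report knee flexion as 180 minus this value.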

How Biomechanical Motion Tracking Works

Biomechanical motion tracking systems rely on a combination of advanced tools:

- Wearable motion sensors attached to key muscle groups and joints

- Infrared cameras that capture movement from multiple angles

- AI-powered software that predicts performance patterns

- Real-time analytics that provide instant feedback

These systems collect movement data, process it through machine learning algorithms, and produce detailed visual reports that highlight strengths and weaknesses.

Key Benefits of Biomechanical Motion Tracking

1. Performance Optimization

Athletes can refine their technique by identifying inefficient movements. Biomechanical motion tracking helps optimize running strides, jump mechanics, throwing accuracy, and swing consistency.

2. Injury Prevention

One of the biggest advantages is early detection of injury risk. Subtle movement imbalances and joint overloads can be identified before they lead to serious injuries, allowing proactive correction through targeted training programs.
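One common way to quantify a left/right imbalance is a symmetry index: the absolute difference between limbs divided by their mean, expressed as a percentage. The sketch below is illustrative only; the metric names, sample values, and the 10% flagging threshold are hypothetical assumptions, not clinical guidance.

```python
from typing import Dict, List, Tuple

def symmetry_index(left: float, right: float) -> float:
    """Absolute left/right asymmetry as a percentage of the two-limb mean (0 = symmetric)."""
    mean = 0.5 * (left + right)
    if mean == 0:
        raise ValueError("mean of left and right must be non-zero")
    return abs(left - right) / mean * 100.0

def flag_imbalances(metrics: Dict[str, Tuple[float, float]],
                    threshold_pct: float = 10.0) -> List[str]:
    """Names of metrics whose asymmetry exceeds threshold_pct (a hypothetical cut-off)."""
    return [name for name, (left, right) in metrics.items()
            if symmetry_index(left, right) > threshold_pct]

# Hypothetical per-session averages as (left, right).
session = {
    "peak_ground_reaction_force_N": (1850.0, 1600.0),
    "stance_time_ms": (245.0, 250.0),
}
print(flag_imbalances(session))  # ['peak_ground_reaction_force_N']
```

Here the force asymmetry is about 14.5% and the stance-time asymmetry about 2%, so only the first metric is flagged for follow-up.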

3. Faster Recovery and Rehabilitation

During rehabilitation, biomechanical motion tracking measures how effectively the body is healing. Therapists can design personalized recovery plans based on accurate movement data rather than trial and error.

4. Data-Driven Coaching

Coaches gain access to objective performance data. Instead of relying on visual judgment alone, they can make informed decisions backed by science.

Real-World Applications in Different Sports

Biomechanical motion tracking is widely used across sports:

- Football and soccer: Improves kicking techniques, sprint speed, and agility

- Cricket and baseball: Enhances bowling and pitching mechanics while reducing arm strain

- Basketball: Optimizes jumping, landing, and shooting form

- Athletics: Refines running gait, stride length, and body posture

This technology adapts to both team sports and individual athletic disciplines.

Challenges and Limitations

While biomechanical motion tracking offers major benefits, it also comes with challenges. High-end systems can be expensive, and interpreting the data requires specialized knowledge. Protecting the privacy of athletes' performance data is also becoming increasingly important.

Despite these limitations, rapid technological advancements are making these systems more accessible and user-friendly.

The Future of Biomechanical Motion Tracking in Sports

The future of biomechanical motion tracking looks promising. Integration with artificial intelligence, augmented reality, and smart training gear will create even more personalized and immersive training environments. Soon, athletes may receive real-time biomechanical feedback directly through smart glasses or wearable displays.

As technology evolves, biomechanical motion tracking will become a standard part of elite and grassroots sports training.

Conclusion

Biomechanical motion tracking is redefining sports science by turning movement into measurable, actionable data. It empowers athletes to train smarter, prevent injuries, and perform at their highest potential. As adoption grows, this technology will continue to shape the future of sports performance worldwide.

2026 Apple Glasses: Features, Release Date, Price, and Why They Could Outshine Ray-Ban Meta

The upcoming Apple Glasses (2026) are shaping up to be one of the most anticipated augmented reality products of the decade. Positioned as Apple’s first truly consumer-friendly AR eyewear, these glasses aim to bring smart, unobtrusive technology into everyday life. Unlike the Apple Vision Pro, which focuses on immersive experiences, Apple Glasses are designed for lightweight, practical, all-day augmented reality.

Many analysts believe they could become a direct competitor to, and potential replacement for, devices like Ray-Ban Meta Smart Glasses.

Below, we explore everything we know so far: expected features, design, pricing, release window, and Apple’s broader AR strategy.

What Are Apple Glasses? A New Standard for Everyday AR

Apple Glasses are expected to overlay digital information onto the real world without blocking your view. Instead of transporting you into mixed-reality environments, they’re designed to support your day-to-day routine with intelligent, context-aware assistance.

Key AR Functions Rumored for Apple Glasses

- Object recognition: Instantly identify people, objects, landmarks, art pieces, or plants.

- Real-time translation: Translate signs, menus, conversations, and text with a glance.

- Meeting assistance: Generate quick summaries, notes, and reminders during calls or discussions.

- Contextual alerts: Receive subtle notifications that don’t interrupt your field of vision.

These features combine Apple’s advanced AI, Siri integration, and on-device intelligence, turning the glasses into a natural extension of your daily workflow.

Top Features Expected in Apple Glasses (2026)

Apple is reportedly focusing on real usability, not gimmicks. These glasses are built to solve everyday problems (navigation, communication, and productivity) through seamless AR visuals.

1. AR Navigation Made Simple

Turn-by-turn directions could appear directly on your lenses, helping you walk, drive, or cycle without checking your phone.

2. Built-in Cameras for Visual Lookups

Lightweight cameras may allow:

- Quick photos

- Object detection

- AI-powered visual search

- Instant information about places, art, or products

Just look at an object, and Siri could tell you exactly what it is.

3. Embedded Speakers for Hands-Free Audio

Integrated speakers could support:

- Music playback

- Phone calls

- Real-time translation

- FaceTime with augmented overlays

This feature alone could transform how users handle communication on the go.

4. Lightweight and Stylish Design

Early leaks suggest multiple frame styles, optional color choices, and support for prescription lenses, ensuring comfort for everyday wear.

These features are not about creating a sci-fi gadget; they're about making AR genuinely practical.

Design, Comfort, and Apple Ecosystem Integration

Apple is expected to lean heavily on its signature minimalist design. Sleek metal frames, comfortable arms, and interchangeable lenses are all likely.

Deep Integration with Apple Devices

Apple Glasses will reportedly sync effortlessly with:

- iPhone

- Apple Watch

- Mac

- iPad

Some processing may be offloaded to the iPhone, helping the glasses stay light while improving battery life.

However, this tight integration may limit compatibility for Android users, keeping Apple Glasses exclusive to the Apple ecosystem.

Apple Glasses 2026 Release Date

According to industry reports, Apple is expected to announce the glasses at WWDC 2026, with a commercial release planned for:

Late 2026 or Early 2027

This timing aligns with Apple’s multi-year AR development roadmap and the company’s push to lead the next generation of wearable technology.

Apple Glasses Price: Under $1,000

One of the biggest surprises is the rumored price tag.

Estimated Price: Below $1,000

This positions Apple Glasses as:

- More affordable than Vision Pro

- A premium alternative to Ray-Ban Meta glasses

- Accessible enough for mainstream consumers

Apple appears to be targeting both AR enthusiasts and general users who want smarter, everyday eyewear.

Apple Glasses vs. Ray-Ban Meta: Will Apple Replace the Competition?

The Ray-Ban Meta Smart Glasses have dominated the lightweight AR/AI eyewear space, but Apple's entry could disrupt this fast-growing market.

Where Apple May Have the Advantage

- Better integration with iPhone

- More advanced AI and Siri functions

- Stronger privacy and on-device processing

- Sleek Apple-style customizable frames

- A massive App Store ecosystem to build AR experiences

If Apple delivers its promised combination of style, practicality, and AI intelligence, the Apple Glasses could become the new standard in consumer AR.

The Future of Everyday Augmented Reality

Apple’s upcoming AR eyewear marks a huge step toward making augmented reality mainstream. Instead of bulky headsets or niche smart glasses, the company is creating something ordinary people can wear comfortably throughout the day.

What Apple Glasses Could Change

- How we navigate cities

- How we translate languages

- How we manage meetings

- How we access information instantly

- How we communicate hands-free

The 2026 Apple Glasses could be the moment AR stops being a futuristic concept and becomes part of everyday life.

Final Thoughts

With a predicted sub-$1,000 price tag, a late-2026 release timeline, and powerful hands-free AI features, the Apple Glasses have the potential to reshape the AR market, and even surpass major competitors like Ray-Ban Meta.

As we approach 2026, expectations are growing. If Apple delivers what leaks suggest, the Apple Glasses may become one of the most influential consumer tech launches of the decade.

Deepseek 3.2: The Open-Source AI Model That Outsmarts ChatGPT 5 in Advanced Reasoning Tests

The world of artificial intelligence is changing fast, but the biggest breakthrough of 2025 may not come from a trillion-dollar tech giant. Instead, it arrives from the open-source community. Deepseek 3.2, the latest generation of Deepseek's AI model family, has shocked researchers by outperforming top proprietary systems like ChatGPT 5 and Gemini 3.0 Pro in rigorous reasoning and problem-solving benchmarks.

What makes this release historic is not only its extraordinary performance, but its complete openness. Deepseek 3.2 is fully open-source under the MIT License, making high-level reasoning capability accessible to researchers, developers, and organizations worldwide, without paywalls.

With gold-medal-level results at the International Mathematical Olympiad (IMO) and the International Olympiad in Informatics (IOI), plus innovations like sparse attention and advanced reinforcement learning, Deepseek 3.2 is reshaping what open models can achieve. Let's explore exactly how it works, why it beats certain closed models, and how it's redefining the future of accessible AI.

What Is Deepseek 3.2? A New Benchmark for Open-Source AI

Deepseek 3.2 is a next-generation AI model designed for deep reasoning, mathematical problem-solving, and complex multi-step tasks. Unlike many open models that serve as lightweight alternatives to commercial models, Deepseek 3.2 directly challenges industry leaders-and in many cases exceeds them.

Why Deepseek 3.2 Matters

- It is the first open-source AI model to win gold at both IMO and IOI.

- It surpasses GPT-5 and Gemini 3.0 Pro in certain critical reasoning benchmarks.

- It introduces new architecture innovations, including Deepseek Sparse Attention (DSA).

- It is fully open-source, allowing unrestricted global access.

For researchers, startups, and engineers, Deepseek 3.2 proves that open models can match or surpass the world’s most advanced proprietary systems.

Deepseek 3.2: Quick Highlights & Key Takeaways

TL;DR Why Deepseek 3.2 Stands Out

- Dual gold-medal-level results at IMO & IOI, a rare achievement demonstrating elite reasoning ability.

- Outperforms ChatGPT 5 and Gemini 3.0 Pro in advanced reasoning, multi-step tasks, and logic benchmarks.

- Deepseek Sparse Attention (DSA) provides efficient long-context processing with near-linear scaling.

- Reinforcement learning and agentic task synthesis dramatically boost task generalization and tool use.

- Open-source MIT license, making it one of the most accessible high-performing models ever released.

- 671B total parameters, but only 37B active during inference, making deployment far more efficient.

These strengths make Deepseek 3.2 a top contender in AI research, and a model developers can build on without restrictions.
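The gap between 671B total and 37B active parameters comes from a mixture-of-experts (MoE) design, in which a router sends each token to only a few expert sub-networks. The toy Python sketch below shows generic top-k softmax gating; it is not Deepseek's actual router, whose details differ, and the expert functions, routing vectors, and top_k=2 are hypothetical.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]

def softmax(xs: Vector) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x: Vector, router: List[Vector], experts: List[Expert],
                top_k: int = 2) -> Tuple[Vector, List[int]]:
    """Route token vector x to its top_k experts and mix their outputs."""
    # One routing score per expert: dot product of x with that expert's routing vector.
    scores = [sum(w * xi for w, xi in zip(r, x)) for r in router]
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Gate weights are normalised over the selected experts only.
    gates = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for g, i in zip(gates, chosen):
        y = experts[i](x)  # only the selected experts are evaluated
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, chosen

# Hypothetical toy setup: 4 experts, each of which just scales its input.
experts: List[Expert] = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
router = [[0.1, 0.0], [0.9, 0.0], [0.5, 0.0], [0.7, 0.0]]

out, chosen = moe_forward([1.0, 0.0], router, experts, top_k=2)
print(chosen)  # [1, 3]: only 2 of the 4 experts ran for this token
print(f"Active share reported for Deepseek 3.2: {37 / 671:.1%}")  # 5.5%
```

The design choice is the trade-off the highlights describe: total capacity grows with the number of experts, while per-token compute grows only with the few experts actually selected.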

Major Achievements of Deepseek 3.2

Deepseek 3.2’s achievements go far beyond raw benchmarks. They highlight a model that can think, reason, plan, and solve problems at a level once reserved for closed, proprietary systems.

1. Gold Medals at IMO and IOI

These competitions involve:

- high-level mathematics

- algorithmic thinking

- multistep logic

- complex reasoning under pressure

Deepseek 3.2 handled these challenges with precision, evidence of its powerful internal reasoning mechanisms.

2. Beats GPT-5 and Gemini 3.0 Pro in Multi-Step Reasoning

While GPT-5 excels in creative generation and conversation, Deepseek 3.2 demonstrates superior performance in:

- mathematical reasoning

- coding-based logic

- structured problem solving

- chain-of-thought benchmarks

In these categories, Deepseek 3.2 scores higher on the reported benchmarks.

3. Available in Two Versions

- Standard Version – Balanced for everyday use

- Special Version – Optimized for ultra-difficult reasoning tasks

Users can choose the ideal version based on their resource and accuracy needs.

Core Innovations That Power Deepseek 3.2

Deepseek 3.2 introduces several advanced techniques that drive its exceptional performance.

1. Deepseek Sparse Attention (DSA): Faster, Smarter, More Efficient

Traditional attention mechanisms scale quadratically, slowing models down as context grows.

Deepseek 3.2 solves this with Sparse Attention, which:

- enables near-linear scaling

- reduces computational cost

- supports longer context windows

- speeds up inference

- lowers VRAM requirements

This innovation alone makes Deepseek 3.2 incredibly efficient for large-scale applications.
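The idea behind sparse attention can be sketched as follows: each query attends only to its top_k highest-scoring earlier tokens instead of all of them, so the attention step touches roughly L * k query-key pairs rather than about L^2 / 2. This is a generic top-k sketch in plain Python, not Deepseek's DSA implementation. For simplicity it selects keys using the full scores, which saves nothing on its own; practical designs use a cheaper selection step so that only the expensive final attention is restricted to k tokens. The k = 2048 below is a hypothetical value.

```python
import math
from typing import List

Matrix = List[List[float]]

def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))

def topk_causal_attention(q: Matrix, k: Matrix, v: Matrix, top_k: int) -> Matrix:
    """Causal attention where each query uses only its top_k highest-scoring keys."""
    scale = 1.0 / math.sqrt(len(q[0]))
    out: Matrix = []
    for t, q_t in enumerate(q):
        # Causal mask: position t may only look at positions 0..t.
        scored = [(dot(q_t, k[s]) * scale, s) for s in range(t + 1)]
        kept = sorted(scored, reverse=True)[:top_k]
        # Softmax over the kept scores only (max-subtracted for stability).
        m = max(score for score, _ in kept)
        weights = [math.exp(score - m) for score, _ in kept]
        z = sum(weights)
        row = [0.0] * len(v[0])
        for w, (_, s) in zip(weights, kept):
            row = [r + (w / z) * vs for r, vs in zip(row, v[s])]
        out.append(row)
    return out

def attended_pairs(seq_len: int, top_k: int) -> int:
    """Number of query-key pairs the attention step touches under a causal mask."""
    return sum(min(t + 1, top_k) for t in range(seq_len))

# Dense causal attention is the special case top_k = seq_len.
for L in (4096, 8192, 16384):
    dense = attended_pairs(L, L)
    sparse = attended_pairs(L, 2048)  # hypothetical k = 2048
    print(f"L={L}: dense {dense:,} pairs, top-2048 {sparse:,} pairs")
```

Doubling L roughly quadruples the dense count, while the sparse count grows close to linearly once L is well above k, which is the "near-linear scaling" described above.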

2. Reinforcement Learning for Superior Generalization

Deepseek dedicates over 10% of its compute to post-training reinforcement learning, a much higher ratio than most models.

This improves:

- instruction-following

- decision-making

- reasoning accuracy

- task generalization across unfamiliar inputs

This RL-focused approach elevates Deepseek’s adaptability and reliability.

3. Agentic Task Synthesis Pipeline

Deepseek 3.2 trains on:

- 1,800 environments

- 85,000 complex agentic tasks

These environments mimic real-world reasoning situations, teaching the model to:

- plan ahead

- use tools effectively

- solve multi-step logical problems

- navigate complex task chains

This makes Deepseek 3.2 particularly powerful for automation, analysis, and agent-based AI systems.

Technical Breakdown: Specs That Redefine Scalability

Deepseek 3.2 strikes a rare balance between enormous capacity and efficient inference.

Technical Specifications

- 671 billion parameters total

- 37 billion active parameters during inference

- FP8 precision mode: 700 GB VRAM

- BF16 precision mode: 1.3 TB VRAM

- Distributed training optimized

- Fully open-source under MIT License
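The VRAM figures follow from simple arithmetic: weight memory is roughly parameter count times bytes per parameter (1 byte in FP8, 2 in BF16). The sketch below counts weights only; the KV cache, activations, and framework overhead add more, which is one reason real deployments need headroom beyond these numbers. The 2-FLOPs-per-parameter rule for a forward pass is a common approximation, not a Deepseek-published figure.

```python
BYTES_PER_PARAM = {"FP8": 1, "BF16": 2}

def weight_memory_gb(num_params: float, precision: str) -> float:
    """Memory for model weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9

TOTAL_PARAMS = 671e9   # all experts must be resident in memory
ACTIVE_PARAMS = 37e9   # parameters used per token

for precision in ("FP8", "BF16"):
    print(f"{precision}: ~{weight_memory_gb(TOTAL_PARAMS, precision):,.0f} GB of weights")
# FP8: ~671 GB of weights
# BF16: ~1,342 GB of weights

# Rough forward-pass cost: about 2 FLOPs per active parameter per token.
print(f"~{2 * ACTIVE_PARAMS / 1e9:.0f} GFLOPs per token")  # ~74 GFLOPs per token
```

Note the asymmetry: memory scales with the 671B total, while per-token compute scales with the 37B active parameters.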

Despite its massive scale, Deepseek 3.2 remains far more efficient than competing models with similar capabilities.

Closing the Tool-Use Gap Between Open and Closed Models

One of the hardest challenges in AI is effective tool use: invoking APIs and using calculators, coding tools, and other external resources.

Deepseek 3.2 excels in:

- decision-making

- tool-call accuracy

- long-chain planning

- function calling

- agent-style reasoning

This allows it to rival closed systems that traditionally dominate in tool-assisted workflows.
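At the application level, tool use usually means the model emits a structured call that the surrounding program parses and executes. The Python sketch below assumes a hypothetical JSON format of the form {"name": ..., "arguments": {...}} and hypothetical tools; Deepseek's actual function-calling format may differ, so check its API documentation before relying on any schema.

```python
import json
from typing import Any, Callable, Dict

TOOLS: Dict[str, Callable[..., Any]] = {}

def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so the model can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn

@tool
def multiply(a: float, b: float) -> float:
    return a * b

@tool
def convert_currency(amount: float, rate: float) -> float:
    return round(amount * rate, 2)

def dispatch(model_output: str) -> Dict[str, Any]:
    """Parse a (hypothetical) JSON tool call and run the matching registered tool."""
    try:
        call = json.loads(model_output)
        fn = TOOLS[call["name"]]
        result = fn(**call.get("arguments", {}))
        return {"role": "tool", "name": call["name"], "content": result}
    except (json.JSONDecodeError, KeyError, TypeError, AttributeError) as err:
        # Malformed or unknown calls are reported back instead of crashing.
        return {"role": "tool", "name": None, "error": f"{type(err).__name__}: {err}"}

print(dispatch('{"name": "multiply", "arguments": {"a": 12, "b": 7}}'))
# {'role': 'tool', 'name': 'multiply', 'content': 84}
print(dispatch('{"name": "search_web", "arguments": {}}'))  # unknown tool -> error message
```

Returning errors as messages, rather than raising, lets the model see what went wrong and retry with a corrected call, which matters for the long-chain planning described above.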

Scalability, Cost Efficiency, and Accessibility

Unlike closed corporate models, Deepseek 3.2 is:

- free to use

- free to modify

- free to distribute

This dramatically lowers the barrier to entry for:

- universities

- independent researchers

- small businesses

- AI startups

- global developers

With open weights and transparent training, Deepseek 3.2 empowers innovation at every level.

Deepseek 3.2: A Milestone in Open-Source AI Innovation

Deepseek 3.2 stands as one of the most significant open-source AI achievements of the decade. Its blend of reasoning power, efficiency, and unrestricted access challenges the dominance of closed models like ChatGPT 5 and Gemini 3.0 Pro.

By pioneering advances such as sparse attention, powerful reinforcement learning pipelines, and extensive agentic training, Deepseek 3.2 sets a new standard for open models, and paves the way for a more democratic AI future.

The message is clear:

The future of AI is open, powerful, and accessible to everyone.
